The research paper introduces IBM’s Granite 3.0, a set of lightweight, state-of-the-art language models built for enterprise use, with support for multilingual tasks, code generation, function calling, and safety. Ranging from 400 million to 8 billion active parameters, Granite models are designed for on-premise and on-device deployment, letting users trade off resource requirements against performance. The models are released under the Apache 2.0 license, which permits both research and commercial use. Their development relies on a data curation framework aligned with IBM’s AI ethics principles, drawing on diverse sources that meet governance, risk, and compliance standards.

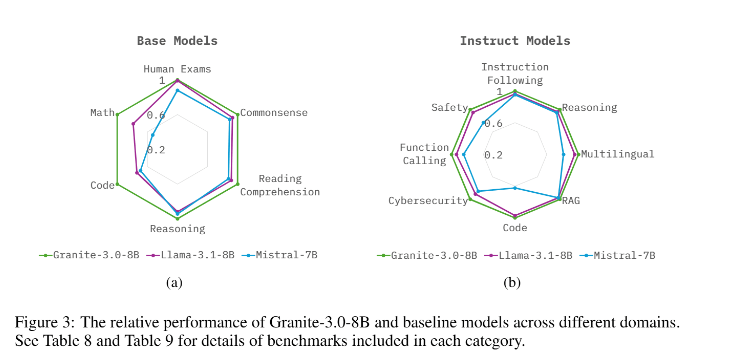

Granite 3.0 models come in dense and mixture-of-experts (MoE) variants, each trained on trillions of tokens. They score strongly on benchmarks spanning reasoning, code generation, and cybersecurity. IBM improves the models’ alignment with human preferences through reinforcement learning and best-of-N sampling, which strengthens performance on instruction-following tasks. This focus on transparency, safety, and enterprise applicability makes Granite 3.0 a practical option for AI-driven solutions in regulated industries and other mission-critical environments.
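Best-of-N sampling draws N candidate responses for the same prompt and keeps the one a reward model scores highest. The minimal sketch below illustrates that generic technique only; it does not reproduce IBM's post-training pipeline. `generate` and `reward` are hypothetical stand-ins for a language model and a reward model, and the toy candidate pool and scoring rule are invented for illustration.

```python
from typing import Callable, List, Tuple


def best_of_n(
    prompt: str,
    generate: Callable[[str, int], str],
    reward: Callable[[str, str], float],
    n: int = 4,
) -> Tuple[str, float]:
    """Sample n candidates and return the highest-scoring one with its score."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # One candidate per seed; a real sampler would use temperature/top-p sampling.
    candidates: List[str] = [generate(prompt, seed) for seed in range(n)]
    scored = [(c, reward(prompt, c)) for c in candidates]
    # max() returns the first maximal element, so ties keep the earliest sample.
    return max(scored, key=lambda pair: pair[1])


# Hypothetical toy stand-ins: a "generator" that cycles through fixed answers
# and a "reward model" that favors answers mentioning the license, then length.
CANDIDATE_POOL = [
    "I am not sure.",
    "Granite 3.0 uses the Apache 2.0 license.",
    "It is open source.",
    "Apache 2.0",
]


def toy_generate(prompt: str, seed: int) -> str:
    return CANDIDATE_POOL[seed % len(CANDIDATE_POOL)]


def toy_reward(prompt: str, completion: str) -> float:
    bonus = 1.0 if "Apache 2.0" in completion else 0.0
    return bonus + len(completion) / 100


best, score = best_of_n("Which license does Granite 3.0 use?", toy_generate, toy_reward, n=4)
print(best, score)
```

Raising N widens the search at the cost of N forward passes per prompt. The output can only be as good as the reward model's judgment, which is why best-of-N is usually paired with a well-calibrated reward model.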